Reviewed by Julianne Ngirngir

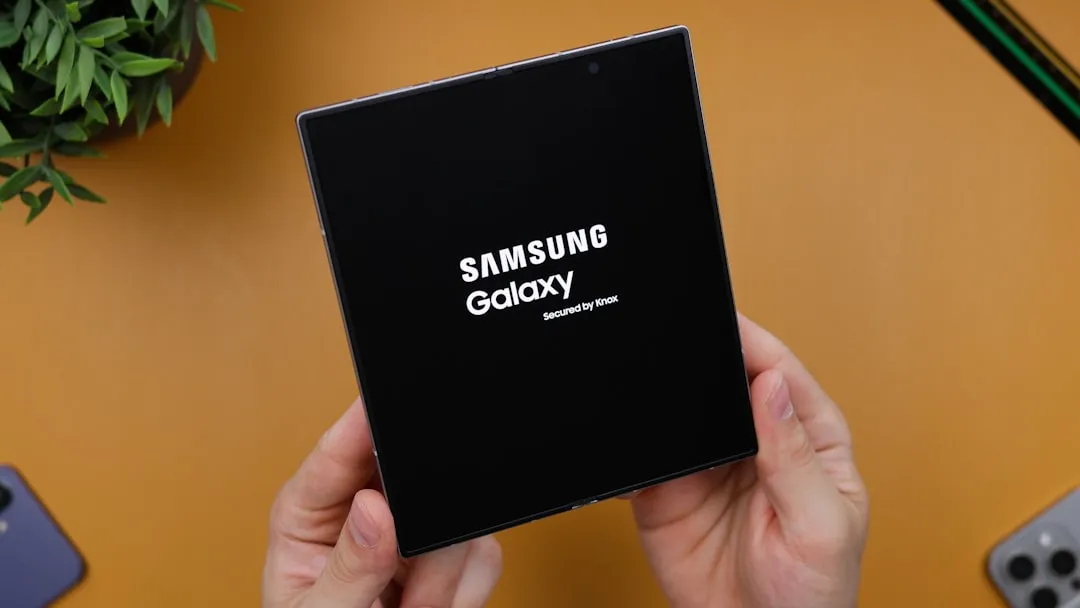

Apple's Vision Pro has been on the market for just over a year, and the spatial computing landscape is already buzzing with anticipation for what comes next. Analyst Ming-Chi Kuo reports that Apple has at least seven XR projects in development through 2028, signaling that the current $3,499 headset is just the beginning of Apple's ambitious roadmap. With mass production of an M5-powered Vision Pro reportedly set to begin in the second half of 2025, we're looking at a fascinating evolution that could reshape how we think about mixed reality computing.

The M5 upgrade: more than just a spec bump

The most immediate development on Apple's Vision Pro roadmap is a significant processor upgrade aimed at the computational demands of spatial computing. The current Vision Pro runs on Apple's M2 chip; multiple sources indicate that mass production of an M5-powered successor will begin in the second half of 2025.

The leap from M2 to M5 is far more than a typical annual refresh. The M5's upgraded Neural Engine is expected to speed up AI-driven functions such as eye tracking, gesture recognition, and immersive content creation. Industry analysts predict a launch window between late 2025 and spring 2026, with pricing potentially around $3,500.

The crucial detail is that Apple may use a variant of the chip with a stronger Neural Engine than a standard M4 or M5, a recognition that spatial computing demands different processing capabilities than traditional computing. While a Mac runs applications, the Vision Pro must interpret its surroundings in real time, handling input from 12 cameras, LiDAR data, and complex spatial mapping simultaneously while maintaining the illusion that digital objects exist in physical space.

Two paths diverging: Pro versus accessible

Apple's Vision Pro strategy reveals a sophisticated understanding of spatial computing's diverse market opportunities, splitting development into two distinct directions that serve fundamentally different use cases. The professional route doubles down on computational power for specialized applications where the current price point makes economic sense.

Apple is reportedly considering a Mac-dependent version of the Vision Pro for high-end applications such as surgical overlays and flight simulators. The headset would plug into a Mac, acting as a high-powered display and interface that draws on desktop computational resources. In effect, it revives Apple's previously canceled concept of augmented reality glasses paired to a Mac, reimagined as a full headset that is not limited to onboard processing.

The consumer accessibility strategy takes a different approach entirely. Apple is considering prices ranging from $1,500 to $2,500 for a cheaper Vision headset—a significant reduction that opens spatial computing to mainstream adoption. This creates a fascinating engineering challenge: maintaining the core Vision Pro experience that differentiates it from Meta's Quest platform while achieving dramatically lower price points. The solution likely involves strategic component choices—perhaps simpler displays or reduced sensor arrays—while preserving the eye-tracking and spatial awareness that define Apple's approach to mixed reality.

Beyond headsets: the glasses revolution

Perhaps the most exciting part of Apple's XR roadmap extends beyond headsets entirely, toward seamless, everyday wearability. Apple CEO Tim Cook is reportedly 'hell bent' on beating Meta to market with industry-leading AR glasses. Apple is working on smart glasses with cameras, speakers, and microphones, but no displays, that are expected to launch in 2026.

This timeline accelerates rapidly from there. Ming-Chi Kuo's analysis suggests Apple expects to ship more than 10 million AR/VR products in 2027, including a Ray-Ban Meta-style smart glasses product projected for 3 million to 5 million shipments. The roadmap culminates with extended reality glasses featuring built-in see-through color displays coming in 2028 with AI connectivity.

The technical progression is notable: a lighter Vision Air is slated for 2027 with a 40% weight reduction and an iPhone-class processor, bridging today's immersive headsets and tomorrow's ubiquitous glasses. This is Apple's long-term vision for spatial computing: moving from isolated, immersive experiences to seamless integration with daily life, where digital information enhances rather than replaces our perception of the physical world.

Software and ecosystem maturation

While hardware roadmaps capture headlines, the software evolution of visionOS represents equally transformative developments that lay the foundation for mainstream adoption. visionOS 2.4 introduces the first set of Apple Intelligence features to the device, including Image Playground for custom image creation and Genmoji for personalized emoji generation—early indicators of how Apple envisions AI-native spatial computing experiences.

The introduction of Spatial Gallery demonstrates Apple's content ecosystem strategy, featuring curated immersive experiences across art, nature, sports, and culture. Content partners include Red Bull, Cirque du Soleil, and Porsche—high-quality, branded experiences that justify premium positioning while building the immersive content library essential for long-term engagement.

More strategically significant are the enterprise developments that could drive professional adoption, where price is less of a barrier. Apple added enterprise APIs for protecting content, multi-user spatial collaboration, federated identity management, and device fleet integration. These capabilities move Vision Pro from a consumer experiment toward a professional platform. Apple's avatars, called Personas, were also upgraded in visionOS 26 to convey facial nuances, emotions, and hand gestures in meetings, subtle but crucial improvements that make spatial computing feel natural rather than artificial.

Where spatial computing heads next

The Vision Pro roadmap reflects Apple's view that spatial computing is not just a product category but a computing paradigm shift comparable to the transition from desktop to mobile. The global XR market is projected to reach $97 billion by 2028, growing at a 32.8% compound annual growth rate (CAGR), and Apple's multi-pronged approach positions the company to capture different segments of this expanding opportunity.

The strategic lessons from the original Vision Pro launch are evident throughout this roadmap. Although Apple reportedly sold no more than about 500,000 units worldwide, the company is treating this initial release as market research for a much broader spatial computing strategy. The M5 upgrade addresses computational limitations, the cheaper variant tackles market accessibility, the Mac-tethered version serves professional use cases, and the glasses development acknowledges that mass adoption requires invisible integration into daily life.

What's most compelling about Apple's approach is how it anticipates the convergence of AI and spatial computing to create new interaction paradigms. The M5 chip's upgraded Neural Engine could enable spatial experiences that understand context, predict user needs, and blend digital information with physical reality. We're not just looking at more powerful headsets; we're seeing the groundwork for intelligent environments that adapt to human behavior and enhance rather than distract from real-world engagement.

As we move toward 2028, Apple's seven-project roadmap suggests the company is betting that spatial computing will follow the same adoption curve as smartphones: beginning with early adopters willing to pay premium prices, expanding through improved accessibility and use cases, and ultimately becoming as fundamental to daily life as mobile devices are today. The next few years will reveal whether Apple's methodical, ecosystem-focused approach can turn an experimental technology into the next major computing platform.
