Google Research has quietly unleashed something that could fundamentally reshape how we think about creating immersive experiences. The merging of artificial intelligence with extended reality isn't just another tech trend; it represents a potential paradigm shift in immersive intelligent computing, and XR Blocks sits right at the heart of this transformation. There was no public launch event, but the release has broad implications.

Here's what makes this particularly compelling: while AI development has been supercharged by mature frameworks like JAX, PyTorch, and TensorFlow, the XR space has remained fragmented. Imagine trying to build a smartphone app and having to write your own operating system every single time. Now, Google's cross-platform framework aims to accelerate human-centered AI + XR innovation by bridging that gap with uncommon simplicity.

Breaking Down the Complexity Barrier

Let's be honest: creating intelligent XR experiences has traditionally been so fragmented that it puts barriers between a creator's vision and its realization. Think about the typical developer journey: master computer vision, nail 3D rendering, integrate AI systems, perfect spatial tracking, and then make it all play nicely together, just to build a basic prototype. It is like asking someone to become a master chef, electrician, plumber, and interior designer before they can open a restaurant.

XR Blocks flips that script by providing a modular architecture with plug-and-play components for the core abstractions in AI + XR: user, world, interface, AI, and agents. Instead of rebuilding the engine, creators can focus on the drive.
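To make "plug-and-play" concrete, here is a minimal TypeScript sketch of the idea: each core abstraction occupies a named slot, and registering a new module in a slot swaps out the old one without touching the rest of the app. Slot, XRModule, ModuleRegistry, and FrameCounter are hypothetical names invented for this illustration, not the actual XR Blocks API.

```typescript
// Hypothetical sketch: these names are illustrative, not the XR Blocks API.
type Slot = "user" | "world" | "interface" | "ai" | "agents";

// Fixed order in which modules are ticked each frame.
const SLOT_ORDER: readonly Slot[] = ["user", "world", "interface", "ai", "agents"];

interface XRModule {
  readonly name: string;
  // Called once per frame with the elapsed time in seconds.
  update(dtSeconds: number): void;
}

class ModuleRegistry {
  private readonly modules = new Map<Slot, XRModule>();

  // Registering into an occupied slot replaces the previous module,
  // which is what lets one implementation be swapped for another.
  use(slot: Slot, mod: XRModule): this {
    this.modules.set(slot, mod);
    return this;
  }

  get(slot: Slot): XRModule | undefined {
    return this.modules.get(slot);
  }

  // Tick every registered module in SLOT_ORDER; empty slots are skipped.
  update(dtSeconds: number): void {
    for (const slot of SLOT_ORDER) {
      this.modules.get(slot)?.update(dtSeconds);
    }
  }
}

// Trivial module that only counts frames, for demonstration.
class FrameCounter implements XRModule {
  readonly name: string;
  frames = 0;

  constructor(name: string) {
    this.name = name;
  }

  update(_dtSeconds: number): void {
    this.frames += 1;
  }
}
```

Swapping, say, a simulated world module for a depth-sensing one is then a single use("world", ...) call; nothing else in the app has to change.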

What's particularly clever about Google's approach is the foundation. Because XR Blocks is built on accessible technologies like WebXR, three.js, LiteRT, and Gemini, creators do not need specialized hardware or proprietary tools to get started. You can prototype in a browser on your laptop.

The real breakthrough lies in how the framework provides a high-level, human-centered abstraction layer that separates the what of an interaction from the how of its low-level implementation. This separation frees creators to focus on ideas rather than plumbing. It is the difference between telling a smart home what you want and rewiring the house yourself.
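A small TypeScript sketch can show what separating the what from the how might look like, assuming interactions are described as plain data and executed by handlers registered elsewhere. InteractionSpec, InteractionRuntime, and the action names are hypothetical and are not taken from XR Blocks.

```typescript
// Hypothetical sketch: names are illustrative, not the XR Blocks API.

// The "what": plain data describing an interaction.
interface InteractionSpec {
  trigger: "pinch" | "tap" | "gaze";
  target: string; // object label, e.g. "lamp"
  action: string; // name of a high-level action, e.g. "toggle"
}

// The "how": low-level code that actually performs an action on a target.
type ActionHandler = (target: string) => string;

class InteractionRuntime {
  private readonly handlers = new Map<string, ActionHandler>();
  private readonly specs: InteractionSpec[] = [];

  // Engine side: register an implementation once.
  registerAction(name: string, handler: ActionHandler): void {
    this.handlers.set(name, handler);
  }

  // Creator side: describe behavior without touching any implementation.
  describe(spec: InteractionSpec): void {
    this.specs.push(spec);
  }

  // The input layer calls this when it recognizes a gesture on an object;
  // returns the result of every matching spec that has a handler.
  dispatch(trigger: InteractionSpec["trigger"], target: string): string[] {
    const results: string[] = [];
    for (const spec of this.specs) {
      if (spec.trigger !== trigger || spec.target !== target) continue;
      const handler = this.handlers.get(spec.action);
      if (handler) results.push(handler(target));
    }
    return results;
  }
}
```

The creator writes only the describe(...) call; registering a different "toggle" handler changes the how without touching the what.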

PRO TIP: Google's architectural and API design choices follow three key principles: embracing simplicity and readability, prioritizing the creator experience, and choosing pragmatism over completeness. The goal is not the most feature-complete system; it is something people actually use, one that takes you from idea to prototype fast.

The Reality Model: Where Ideas Meet Implementation

At XR Blocks' core lies what Google calls the Reality Model, and this is where things get genuinely exciting. It is a system of replaceable modules for XR interaction, centered around Script, which operates on six first-class primitives: the user and the physical world, virtual interfaces and context, and intelligent and social entities. This is not just technical scaffolding; it is a new way to think about how humans, AI, and virtual environments meet.
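The following TypeScript sketch models the six primitives as plain types and treats a Script as anything with a per-frame update method. All type and field names here (RealityModel, agents for intelligent entities, peers for social entities, NearestObjectLabel, and so on) are assumptions made for illustration; the real Reality Model API may be shaped quite differently.

```typescript
// Hypothetical types for the six primitives; not the XR Blocks API.
type Vec3 = [number, number, number];

interface User { position: Vec3 }                                     // user
interface PhysicalObject { label: string; position: Vec3 }            // physical world
interface VirtualPanel { id: string; visible: boolean; text: string } // virtual interface
interface AppContext { locale: string }                               // context
interface Agent { name: string }                                      // intelligent entity
interface Peer { name: string }                                       // social entity

// A snapshot of the Reality Model that scripts read every frame.
interface RealityModel {
  user: User;
  world: PhysicalObject[];
  interfaces: VirtualPanel[];
  context: AppContext;
  agents: Agent[];
  peers: Peer[];
}

// A Script is the application logic that runs against the model.
interface Script {
  update(model: RealityModel): void;
}

function distance(a: Vec3, b: Vec3): number {
  return Math.hypot(a[0] - b[0], a[1] - b[1], a[2] - b[2]);
}

// Example script: label the nearest physical object when it is within
// `radius` meters of the user; otherwise hide the panel.
class NearestObjectLabel implements Script {
  private readonly panelId: string;
  private readonly radius: number;

  constructor(panelId: string, radius: number) {
    this.panelId = panelId;
    this.radius = radius;
  }

  update(model: RealityModel): void {
    const panel = model.interfaces.find((p) => p.id === this.panelId);
    if (panel === undefined) return;

    let nearest: PhysicalObject | undefined;
    let nearestDist = Infinity;
    for (const obj of model.world) {
      const d = distance(model.user.position, obj.position);
      if (d < nearestDist) {
        nearest = obj;
        nearestDist = d;
      }
    }

    if (nearest !== undefined && nearestDist <= this.radius) {
      panel.visible = true;
      panel.text = nearest.label;
    } else {
      panel.visible = false;
      panel.text = "";
    }
  }
}
```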

Here is how it works in practice: the Reality Model is realized by XR Blocks' modular Core engine, which provides high-level APIs for three crucial areas. First, the perception and input pipeline includes camera, depth, and sound modules that continuously feed and update the Reality Model's representation of physical reality. Think of these modules as sensory nerves that keep the system aware of the world around the user.
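As a rough illustration of a pipeline that keeps a model of the physical world current, the sketch below stores the latest reading from each of the camera, depth, and sound modules and reports whether all of them are recent. PerceptionState and SensorReading are hypothetical names, and real sensor payloads (images, depth maps, audio buffers) are left as unknown.

```typescript
// Hypothetical sketch: sensor kinds mirror the modules named above,
// but these types are assumptions, not the XR Blocks API.
type SensorKind = "camera" | "depth" | "sound";
const ALL_SENSORS: readonly SensorKind[] = ["camera", "depth", "sound"];

interface SensorReading {
  kind: SensorKind;
  timestampMs: number;
  payload: unknown; // e.g. an image, a depth map, or an audio buffer
}

// Keeps the most recent reading from each perception module.
class PerceptionState {
  private readonly latest = new Map<SensorKind, SensorReading>();

  // Perception modules push readings as they arrive. A reading older than
  // the one already stored is dropped, so the model never moves backwards.
  ingest(reading: SensorReading): void {
    const prev = this.latest.get(reading.kind);
    if (prev !== undefined && prev.timestampMs > reading.timestampMs) return;
    this.latest.set(reading.kind, reading);
  }

  get(kind: SensorKind): SensorReading | undefined {
    return this.latest.get(kind);
  }

  // True only if every sensor has reported within maxAgeMs of nowMs.
  isFresh(nowMs: number, maxAgeMs: number): boolean {
    return ALL_SENSORS.every((kind) => {
      const r = this.latest.get(kind);
      return r !== undefined && nowMs - r.timestampMs <= maxAgeMs;
    });
  }
}
```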

Second, the AI module acts as a central nervous system, providing functions such as .query and .runModel that make large models an accessible utility. There is no wrestling with custom integrations; you call these functions like any other tool.
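The value of treating large models as a utility is that application code depends on a narrow interface. The TypeScript sketch below assumes such an interface: the method names echo the .query and .runModel functions mentioned above, but the parameter and return types, describeObject, and the EchoAI test double are all hypothetical.

```typescript
// Hypothetical interface; only the method names come from the article,
// the signatures are assumptions.
interface AIModule {
  query(prompt: string): Promise<string>;
  runModel(modelName: string, input: Float32Array): Promise<Float32Array>;
}

// Application code depends only on the interface.
async function describeObject(ai: AIModule, label: string): Promise<string> {
  const answer = await ai.query(`In one sentence, what can I do with a ${label}?`);
  return answer.trim();
}

// Deterministic stand-in for offline prototyping and tests.
class EchoAI implements AIModule {
  async query(prompt: string): Promise<string> {
    return `echo: ${prompt}`;
  }

  async runModel(_modelName: string, input: Float32Array): Promise<Float32Array> {
    // Pretend "model" that doubles each input value.
    return input.map((x) => x * 2);
  }
}
```

Because describeObject only sees the interface, a cloud model, an on-device model, or a stub like EchoAI could be swapped in without changing the calling code.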

Finally, the toolkit provides a library of common affordances and helps render interfaces and complex visual effects. In other words, the visual building blocks are ready to go.

Here is what makes this approach feel new: the framework allows user prompts like "When the user pinches at an object, an agent should generate a poem of it" to be directly translated to high-level instructions. We are moving from coding interactions to describing them in natural language. Imagine the creative burst when the gap between imagination and implementation gets that thin.
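The article does not say how prompts become instructions; in practice a language model would presumably do the translation. To show only the shape of the output, this TypeScript sketch substitutes a toy regular expression that understands a single sentence pattern and turns it into a structured rule. Rule, RULE_PATTERN, and parseRule are hypothetical.

```typescript
// Hypothetical structured rule; field names are illustrative.
interface Rule {
  gestureVerb: string; // e.g. "pinches"
  subject: string;     // e.g. "object"
  task: string;        // e.g. "generate a poem"
}

// Toy stand-in for the translation step. It understands exactly one shape:
// "When the user <verb> at a(n) <subject>, an agent should <task> of it".
const RULE_PATTERN =
  /^when the user (\w+) at an? (\w+), an agent should (.+?) of it\.?$/i;

function parseRule(prompt: string): Rule | null {
  const match = RULE_PATTERN.exec(prompt.trim());
  if (match === null) return null;
  const [, gestureVerb = "", subject = "", task = ""] = match;
  return {
    gestureVerb: gestureVerb.toLowerCase(),
    subject: subject.toLowerCase(),
    task,
  };
}
```

A regex obviously cannot handle open-ended phrasing; the point is the target representation, a gesture, a subject, and a task that an engine can wire to event handlers.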

XR-Objects: Making Every Surface Intelligent

The most compelling demonstration of XR Blocks' potential comes through XR-Objects, and it feels like a glimpse of how we will interact with the physical world next. XR-Objects is an augmented reality research prototype that turns physical objects into interactive digital portals using real-time object segmentation and multimodal large language models.

This is not just overlaying labels on top of things we point at. XR-Objects creates contextual intelligence that understands what you are looking at and why you might want to interact with it, then surfaces relevant capabilities in real time.

The technical implementation is elegantly simple, which makes it all the more impressive. XR-Objects combines real-time object segmentation and classification with multimodal large language models to create dynamic, contextual interactions with virtually any object in view. Under the hood, Google's approach uses MediaPipe for object detection, with mobile-ready convolutional neural networks handling real-time classification. To anchor AR menus, the system combines each object's 2D bounding box with depth data, then converts the result into precise 3D coordinates via raycasting.
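The last step, turning a 2D bounding box plus depth into a 3D anchor, can be sketched with a standard pinhole camera model. This TypeScript example assumes known camera intrinsics (focal lengths fx, fy and principal point cx, cy, all in pixels) and a depth value measured along the camera's optical axis. Casting a ray through the box center and stopping where it reaches that depth gives the point computed below. It illustrates the geometry only and is not XR-Objects' actual code.

```typescript
// Assumed pinhole intrinsics, in pixels.
interface Intrinsics { fx: number; fy: number; cx: number; cy: number }
// Axis-aligned bounding box in image pixels.
interface BBox { xMin: number; yMin: number; xMax: number; yMax: number }
type Vec3 = [number, number, number];

// Back-project the center of a 2D box to a 3D point in camera space.
// Uses the computer-vision convention (x right, y down, z forward);
// three.js cameras use y up and look down -z, so a real app would
// flip the y and z signs before placing content.
function bboxCenterTo3D(box: BBox, depthMeters: number, k: Intrinsics): Vec3 {
  const u = (box.xMin + box.xMax) / 2; // pixel column of the box center
  const v = (box.yMin + box.yMax) / 2; // pixel row of the box center
  const x = ((u - k.cx) / k.fx) * depthMeters;
  const y = ((v - k.cy) / k.fy) * depthMeters;
  return [x, y, depthMeters];
}
```

A box centered on the principal point maps straight ahead of the camera, at (0, 0, depth); boxes off-center shift sideways in proportion to their depth.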

In practical terms, XR-Objects can give analog objects digital functions. Point your device at a kitchen pot and it becomes a smart cooking timer. Look at a book and you can pull up reviews or related titles, or have the system read passages aloud.
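One way to picture "surfacing relevant capabilities" is as a function from a recognized object label to an AR menu. In XR-Objects that decision involves a multimodal LLM; the TypeScript sketch below substitutes a static lookup table, so the entries, MenuAction, and menuFor are hypothetical.

```typescript
// Hypothetical menu entry shown next to a recognized object.
interface MenuAction {
  id: string;
  label: string;
}

// Static lookup standing in for the multimodal LLM that decides what an
// object affords; the entries are illustrative only.
const AFFORDANCES = new Map<string, MenuAction[]>([
  ["pot", [{ id: "timer", label: "Start cooking timer" }]],
  ["book", [
    { id: "reviews", label: "Show reviews" },
    { id: "related", label: "Find related titles" },
    { id: "read-aloud", label: "Read a passage aloud" },
  ]],
]);

// Every object also gets a generic entry so the menu is never empty.
const ASK_ACTION: MenuAction = { id: "ask", label: "Ask about this object" };

// Build the AR menu for a classifier label such as "Pot" or "book".
function menuFor(label: string): MenuAction[] {
  const specific = AFFORDANCES.get(label.trim().toLowerCase()) ?? [];
  return [...specific, ASK_ACTION];
}
```

A Map is used rather than a plain object so that labels like "constructor" cannot accidentally hit inherited properties.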

The user study results speak for themselves: participants using XR-Objects completed tasks significantly faster than with a baseline, with a 31% reduction in task completion time. That is not a tiny boost; it is the kind of efficiency jump that changes how people work and interact with their environment.
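To spell out the arithmetic, write T_base for the baseline completion time and T_XR for the completion time with XR-Objects (notation introduced here). A 31% reduction means:

```latex
T_{\mathrm{XR}} = (1 - 0.31)\,T_{\mathrm{base}} = 0.69\,T_{\mathrm{base}},
\qquad
\frac{T_{\mathrm{base}}}{T_{\mathrm{XR}}} = \frac{1}{0.69} \approx 1.45
```

Put another way, at that rate a participant could finish roughly 1.45 tasks in the time the baseline needed for one.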

Market Momentum and Real-World Applications

The timing for XR Blocks could not be better, and the numbers tell a clear story. The AR market is growing at a CAGR of 33.5% and is expected to exceed $300 billion in the 2030s, while active mobile AR devices are predicted to reach 1.19 billion worldwide by 2028. Separately, the global XR market is projected to reach $85.56 billion by 2030 with a 33.16% CAGR; forecasts like these vary considerably with the source and with how each market is defined.
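Compound annual growth rates are easier to compare when turned into multipliers. The TypeScript helpers below (hypothetical names) apply the standard CAGR formula, value = base × (1 + rate)^years, and invert it to find how long a quantity takes to grow by a given factor.

```typescript
// Value after `years` years of compound growth at `cagr` (0.3316 = 33.16%).
function project(base: number, cagr: number, years: number): number {
  return base * Math.pow(1 + cagr, years);
}

// Years needed for a quantity growing at `cagr` to multiply by `factor`;
// solves factor = (1 + cagr)^years for years.
function yearsToMultiply(factor: number, cagr: number): number {
  return Math.log(factor) / Math.log(1 + cagr);
}
```

For the 33.16% XR rate cited above, yearsToMultiply(2, 0.3316) comes out at roughly 2.42, so the market would double about every 2.4 years if that rate held.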

What is really driving that growth is not just hardware leaps or flashy demos. It is the fusion of AI and XR into useful, everyday tools, and XR Blocks makes that fusion workable for creators who could not navigate the complexity before.

Take medical training, where AI-powered VR simulations provide realistic patient interactions that let practitioners hone their skills in a risk-free environment. With XR Blocks, educators can rapidly prototype training scenarios without assembling a specialized engineering team. AR and VR are also used for surgical simulation and therapy, with intelligent object interaction providing real-time device guidance. Imagine XR-Objects recognizing instruments and offering contextual guidance or safety cues.

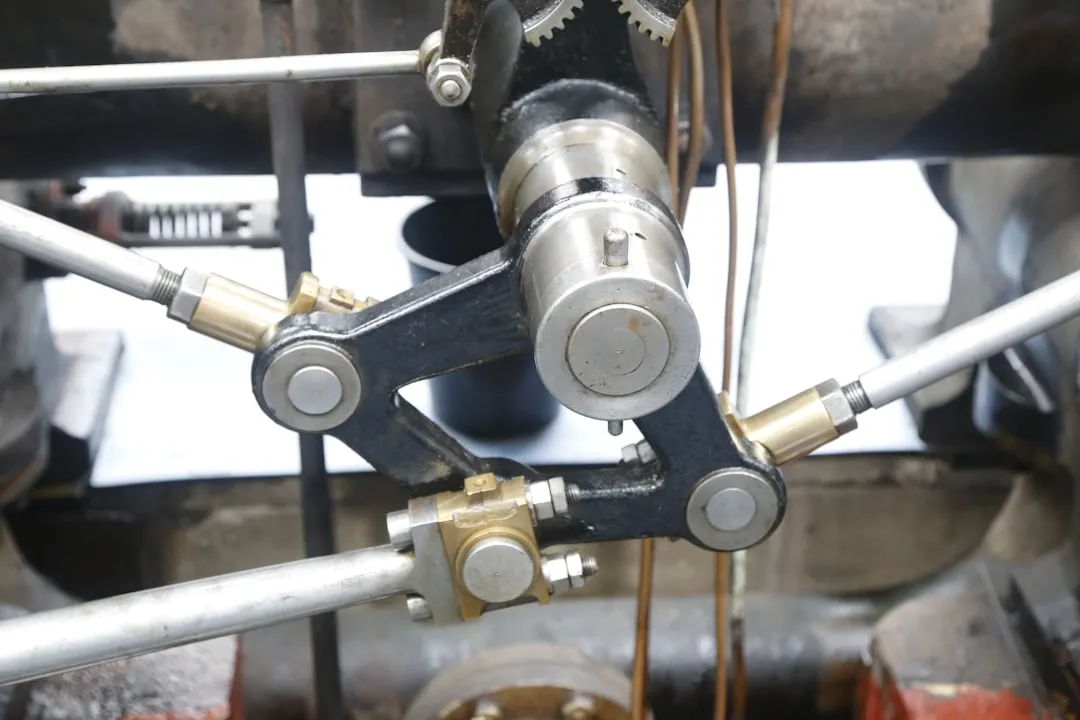

Industrial settings show the same promise. Interaction remains tricky when workers wear gloves or need hands-free operation, and XR-Objects-style object interaction can help. RealWear's Navigator® 500 and 520 headsets integrate AI to give industrial workers hands-free access to schematics, guided workflows, and real-time computer vision analysis. Now picture how much faster deployment could be with XR Blocks' simpler development path.

Where Innovation Meets Accessibility

Perhaps the most significant aspect of XR Blocks is its commitment to democratizing XR development, and this is where the paradigm shift really shows. The framework demonstrates its utility through a set of open-source templates, live demos, and source code on GitHub, and related projects like XR-Objects are open-source too, fostering innovation across industries.

This open approach mirrors what sped up AI adoption, making powerful tools available to a wide community of builders. Think of how web development exploded when frameworks like React and Vue put complex interactions within reach of everyday developers.

The educational implications are immediate. AI integration in VR is transforming corporate training and education through adaptive learning environments, where AI analyzes a learner's interactions and tailors the content to their learning style, making training programs more effective. With XR Blocks, educators and trainers can build these adaptive experiences without deep technical expertise.

AR improves learning by overlaying information that helps students visualize concepts and provides interactive guides in classroom settings. Picture a biology teacher using XR-Objects to turn classroom items into interactive specimens, or a chemistry instructor conjuring instant molecular visualizations from everyday materials.

Retail is shifting too, pulled forward by changing habits. AI-powered virtual stores offer personalized product recommendations and interactive features that mimic the in-store experience. As Gen Z's spending power grows, virtual stores are gaining traction, shaped by gaming influences and boosted by AI advancements. XR Blocks can help small retailers spin up polished AR shopping moments without enterprise-sized budgets.

The Convergence Catalyst We've Been Waiting For

XR Blocks is more than another development framework; it is a catalyst for the long-promised convergence of AI and XR. That convergence is revolutionizing the way we interact with digital environments, and Google's approach gives more creators a way in.

Here is why this moment matters: as AI continues to evolve, we can anticipate even more sophisticated and personalized virtual experiences that will transform industries and set new standards for immersion and interactivity. The difference with XR Blocks is access. These experiences do not need a massive team of specialists; small teams with sharp ideas can build them.

Google's Android XR platform, combined with XR-Objects, aims to deliver seamless AR experiences, while the open-source nature of the tools ensures that innovation can happen anywhere, by anyone. This kind of accessibility has sparked creative booms before, from the rise of web frameworks to the indie app wave.

PRO TIP: The future of immersive computing is not just better hardware or bigger models; it is making the creation of intelligent, responsive, human-centered experiences as natural as describing what you want to build. XR Blocks moves us closer to that vision, where imagination and implementation sit side by side.

The implications for how we work, learn, and navigate digital information could be profound. We might be heading toward a world where every surface is interactive, every object is intelligent, and every creator can build the immersive experience they imagine. With XR Blocks, Google is not just launching a framework; it is opening a new era of accessible innovation at the intersection of AI and extended reality.
