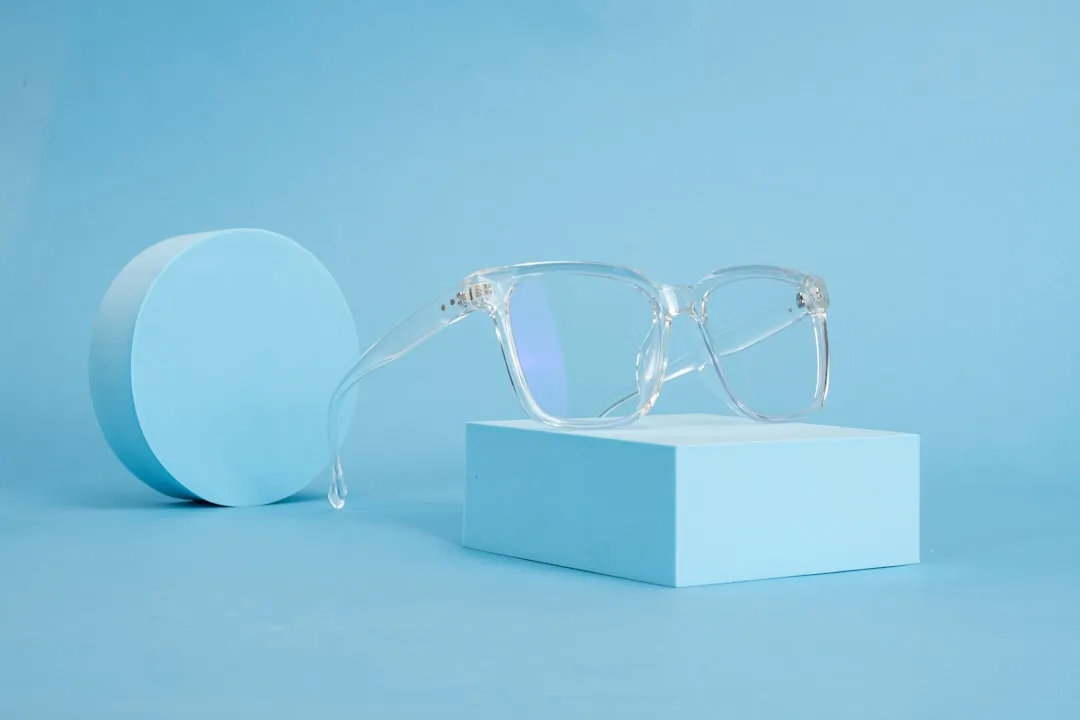

Meta's Ray-Ban Display glasses represent what many consider the first genuinely compelling smart glasses experience since the category emerged. The Meta Ray-Bans have become "the most successful AI hardware product in the past three years," with enough momentum to dominate 80% of Mark Zuckerberg's September keynote. The new Display variant combines a full-color waveguide system with neural interface control, a pairing ZDNet calls breakthrough technology for "the post-smartphone era." To show it off, Meta is opening pop-up shops in major cities, giving hands-on demos to people who want a peek at the future of personal computing.

What makes the Display glasses a game-changer?

The specs tell a clear story. The Meta Ray-Ban Display glasses feature a 600x600-pixel full-color display that delivers 5,000 nits of brightness, brighter than any smartphone or even the Apple Watch Ultra. Even in midday sun on a city sidewalk, it stays readable.

The display approach solves several problems at once. Meta is using a liquid crystal on silicon (LCOS) display positioned off to the side in the right lens, so it stays out of your natural line of sight while keeping text and icons crisp. And for anyone who remembers Google Glass side-eye, the waveguide display is undetectable from the outside, even when showing full-color images.

Weight shows the same restraint. At 69 grams total, they are heavier than the audio-only Ray-Bans yet still in the daily-wear comfort zone. Not featherweight, not a brick.

The neural interface breakthrough

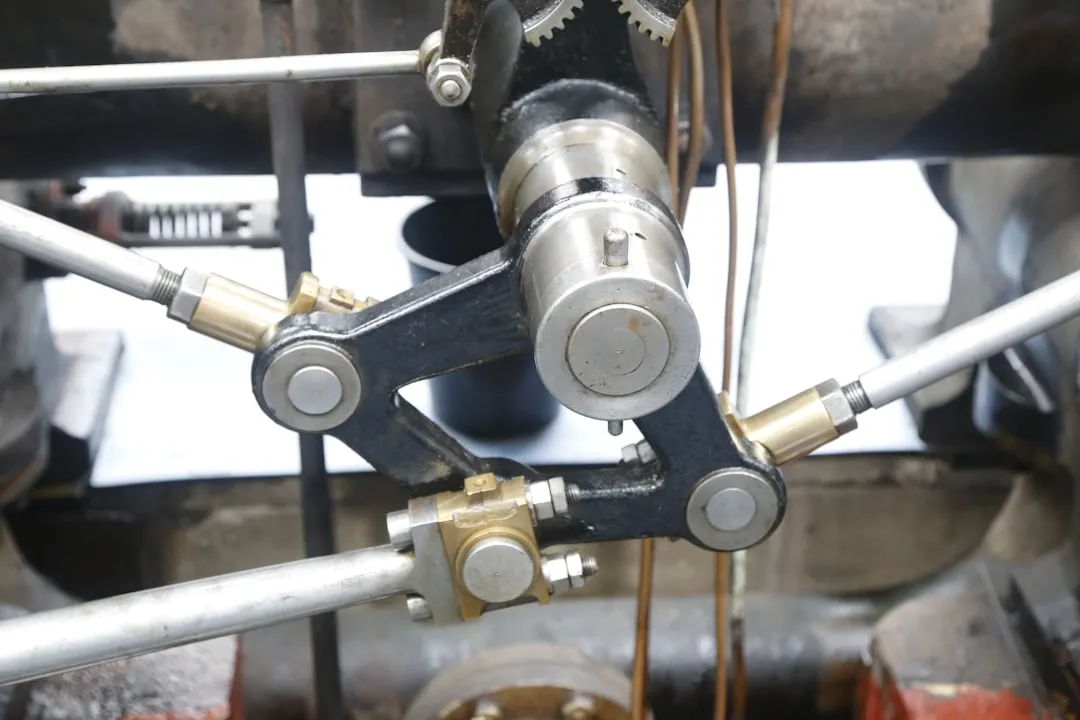

The showstopper is not only the display; it is also the Meta Neural Band that ships with every pair. Zuckerberg called the wristband "the world's first mainstream neural interface," and early testing backs that up. The EMG wristband reads tiny muscle signals so you can navigate with pinch gestures, swipes, and wrist twists, with no taps or air pokes.

The learning curve is quick. Within 5-10 minutes of use, testers were successfully pinching with thumb and middle finger to go back, swiping across screens, and using pinch-and-twist gestures to adjust settings like volume. Reading electrical muscle activity in real time is no small feat, yet it feels simple.

This band stands on deep groundwork. The Neural Band builds on four years of electromyography research with nearly 200,000 participants, so it works out of the box for most people despite differences in muscle signals. The hardware is tough too: it uses Vectran, the same material used in Mars Rover crash pads, and offers 18 hours of battery life and an IPX7 water resistance rating.

It also fixes an awkward truth about smart glasses. Reaching up to poke your face looks odd in public, and voice commands are not always welcome. The Neural Band gives you invisible, intuitive control anywhere.

Smart features that actually matter

The Display glasses run a full operating system that blends the visual UI with AI. The feature set includes live captions with conversation focus, AI-powered visual feedback, WhatsApp messaging, and the ability to capture and preview photos/videos directly on the display.

Conversation focus stands out. The glasses can do live captioning that transcribes only the words of a focused person while ignoring conversations from other people in the same room. The trick uses the directional mic array, and the result is real accessibility, not a gimmick.

For navigation, you get turn-by-turn walking directions in 28 cities at launch, plus video calling where friends see what you see. Ask for a recipe and the AI arranges answers into swipeable cards, step by step, right in your field of view.

Battery life is solid for a first wave. Expect 6 hours of mixed use, with an additional 30 hours from the collapsible charging case. Storage is covered too: 32GB holds up to 1,000 photos and 100 30-second videos.

The pop-up experience strategy

Meta’s retail plan matches the product’s premium vibe and the reality that AR needs hands-on time. You cannot just pre-order: customers must book a demo at retail partners like Best Buy, LensCrafters, Sunglass Hut, or Ray-Ban stores before purchasing the $799 glasses.

That demo-first rule also helps with supply and returns. The company plans to manufacture only 150,000-200,000 units over the next two years, so think limited run, early adopters only.

The pop-ups seal the strategy. The Meta Lab pop-up shops in Las Vegas, Los Angeles, and New York play multiple roles. The Los Angeles location expands to over 20,000 square feet, serving as a flagship experience center, while the Las Vegas shop opens at the Wynn on Oct 16, with the Fifth Avenue New York location set for Nov 13.

Scarcity is doing its thing. Demo appointments in many major cities are already booked through mid-October, which builds buzz and keeps quality high.

Where smart glasses go from here

The Meta Ray-Ban Display looks like a pivotal moment for wearables. At $799, these glasses target tech enthusiasts and professionals, people who like to live on the sharp edge of new platforms.

A quick reality check. If you buy these glasses, you probably shouldn't think of them as an investment you'll wear for 2-3 years. The tech is moving fast, and buyers should expect to upgrade within a year as the next generation leapfrogs these capabilities.

That is fine for an emerging category. The Display glasses chip away at the problems that stalled smart glasses after Google Glass. They look normal, the display stays invisible to others, the interface feels natural, and the features deliver everyday utility instead of demo sparkle.

Meta’s pop-up plan suggests confidence in long-term retail. Temporary stores double as testing grounds for demand and operations, a possible path to permanent smart glasses showrooms. Put the refined hardware together with the neural control and a savvy retail rollout, and it looks like Meta learned from past missteps and wants the lead in the next wave of personal computing.

The Neural Band, on its own, is a big step in human-computer interaction with implications far beyond glasses. As Meta positions this as "the start of the next chapter, not only for AI glasses, but for the future of wearable technology," the way we use every device on us could change. It looks more like the beginning than the finish line.
