Meta just fired a massive shot across the bow in the smart glasses arms race. Honestly, the competition should be terrified. The company's latest lineup (the updated Ray-Ban Meta Gen 2, the new Oakley Meta Vanguard, and the Meta Ray-Ban Display) feels like a quantum leap that resets expectations for wearable AR.

With more than 2 million pairs of Ray-Ban Meta smart glasses sold since launch, and distribution running through EssilorLuxottica's physical stores and e-commerce sites, Meta is not just playing in this space; it is running it. The real kicker? Meta is willing to heavily subsidize the hardware, as it does with its Quest headsets. That choice sets up a long game competitors can't match, laying the foundation for a new computing paradigm rather than a one-off hardware splash.

The display breakthrough that changes everything

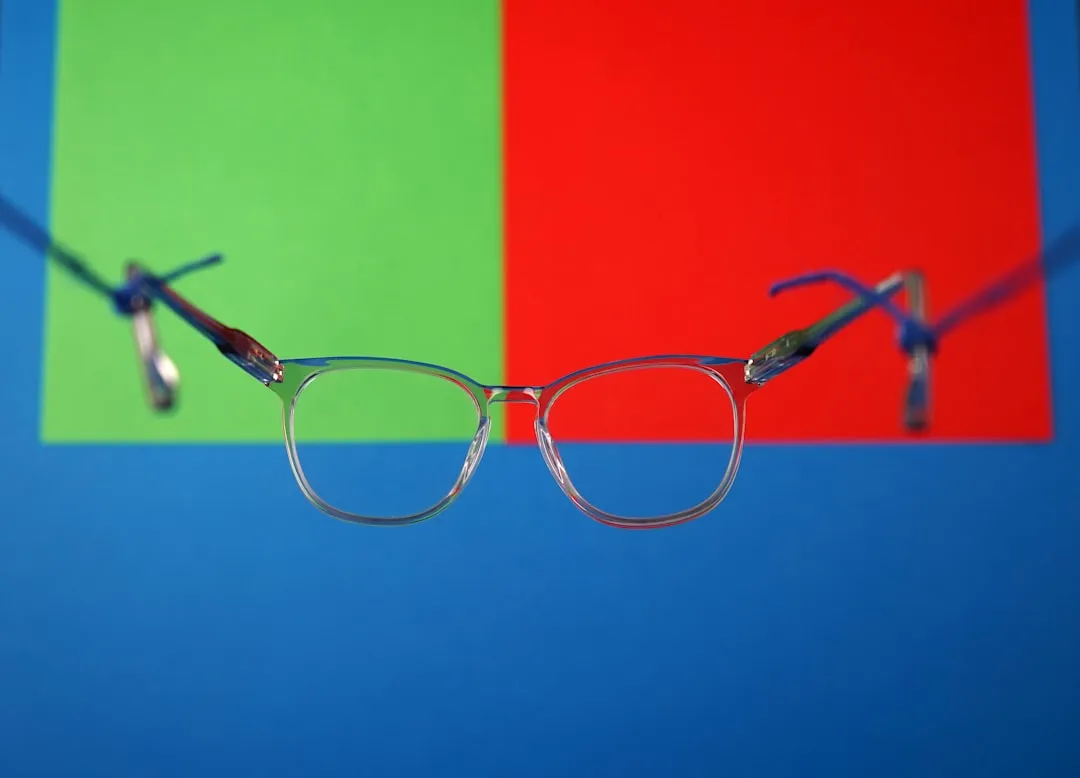

Here is where the technical flex becomes obvious. The Ray-Ban Display is the first pair of Meta smart glasses to include a display, and it solves problems that have haunted AR for years. We are talking about a 600×600-pixel full-color screen that reaches up to 5,000 nits of brightness, brighter than any smartphone or even the Apple Watch Ultra.

Specs are only half the story. The display uses liquid crystal on silicon (LCoS), and it stays remarkably discreet, with only 2 percent light leakage, so people looking at you can barely see it. That sidesteps the social weirdness that doomed early attempts like Google Glass: the whole "Glasshole" problem.

What makes it work is the experience around the screen, not just the screen itself. The in-lens display is crisp within its roughly 20-degree field of view, and it adapts its brightness for dim rooms or blazing noon sun. It sits slightly off to the side so it does not hijack your vision, staying in your periphery until you call it up. Less distraction, more companion.

AI integration that creates synergistic capabilities

The display becomes far more powerful once Meta's AI is in the loop. The glasses integrate live captions, real-time translation, visual responses, private messaging, live video calling, screen sharing, turn-by-turn navigation, a camera viewfinder with zoom, and music playback controls. The magic is how these pieces click together on the lens.

Picture it. The AI handles real-time language translation, object recognition, and contextual assistance based on what you are looking at, with translation covering more than 20 languages. The display overlays the translation, drops navigation directions into your view, and flashes object info right when you need it.

Even everyday tasks get rethought. The glasses can find a recipe and turn it into swipeable cards, so a vague request becomes a clear set of steps. They are also better at locking onto a speaker's voice in noisy rooms: they can identify the person in front of you as the one to focus on, then caption only their words while filtering out the rest. These are not just neat party tricks; they are genuinely useful, and better because they are visual.

The neural band revolution multiplies control precision

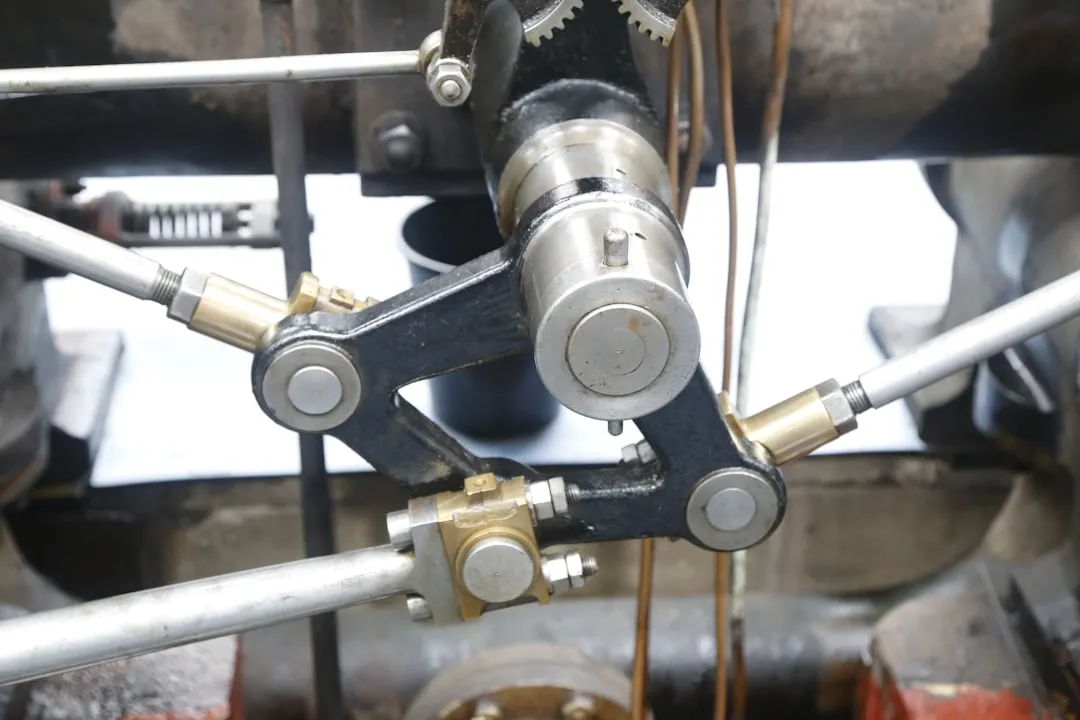

Here is the real separation. The Neural Band turns the display and AI into a cohesive computing platform. Mark Zuckerberg called it "the world's first mainstream neural interface." The wristband uses surface electromyography (sEMG) to detect the electrical signals produced when your wrist and forearm muscles contract, translating intended gestures into commands, even when the movement is so slight it is barely visible.

That precision lifts everything. The band reads forearm muscle signals, then machine learning maps them to gestures. Meta reports high recognition rates in internal testing after a short adaptation period, although it has not published detailed accuracy figures. In practice, you can navigate menus, type, pinch to select, swipe across screens, or write letters that become words for messaging.
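The Neural Band's actual signal processing and models are not public, so the following is only a rough illustration of the general idea of "read muscle signals, then map them to gestures." It is a minimal Python sketch, assuming a multi-channel sEMG stream already cut into fixed-length windows: each window is reduced to per-channel RMS amplitude, and a nearest-centroid classifier is fitted during a short "calibration" session. The gesture names, channel count, and synthetic signal generator are all hypothetical.

```python
import math
import random

# Hypothetical gesture set; the real Neural Band's gestures and model are not public.
GESTURES = ["pinch_index", "pinch_middle", "swipe"]


def rms_features(window):
    """Root-mean-square amplitude per channel for one window of sEMG samples.

    `window` is a list of samples; each sample is a list of channel readings.
    """
    n_channels = len(window[0])
    return [
        math.sqrt(sum(sample[c] ** 2 for sample in window) / len(window))
        for c in range(n_channels)
    ]


class NearestCentroid:
    """Label a feature vector with the gesture whose mean feature vector is closest."""

    def fit(self, features, labels):
        sums, counts = {}, {}
        for x, y in zip(features, labels):
            acc = sums.setdefault(y, [0.0] * len(x))
            for i, v in enumerate(x):
                acc[i] += v
            counts[y] = counts.get(y, 0) + 1
        # Average the accumulated features to get one centroid per gesture.
        self.centroids = {y: [v / counts[y] for v in acc] for y, acc in sums.items()}
        return self

    def predict(self, x):
        # Euclidean distance to each centroid; pick the closest gesture.
        return min(self.centroids, key=lambda y: math.dist(x, self.centroids[y]))


def synthetic_window(gesture, rng, n_samples=50, n_channels=4):
    """Fake sEMG data: each hypothetical gesture drives a different channel harder."""
    hot = GESTURES.index(gesture) % n_channels
    return [
        [rng.gauss(0, 1.0 if c == hot else 0.2) for c in range(n_channels)]
        for _ in range(n_samples)
    ]


rng = random.Random(0)
train_x, train_y = [], []
for g in GESTURES:
    for _ in range(20):  # short per-gesture calibration session
        train_x.append(rms_features(synthetic_window(g, rng)))
        train_y.append(g)

clf = NearestCentroid().fit(train_x, train_y)
test = rms_features(synthetic_window("pinch_middle", rng))
print(clf.predict(test))
```

Nearest-centroid is used here only because it fits in a few lines; a real wristband decoder would almost certainly rely on richer features and a learned model trained across many users.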

The synergy shows up fast. Early testers say that within 5 to 10 minutes they were pinching thumb and middle finger to go back, swiping between screens, and pinching forefinger and thumb then twisting the wrist to change settings like volume. With up to 18 hours of battery life and IPX7 water resistance, it is not a lab demo. It is a daily driver that makes the display and AI feel effortless.

Why the competition should be worried: market dominance through technical superiority

Timing matters. Android XR glasses with displays are not expected until late 2026, possibly 2027. Meta's Ray-Ban Display glasses launched on September 30, 2025, at $799. That is not just a head start; it is a full product generation in a market where momentum snowballs.

The optics help, literally. Meta's geometric waveguides create a more natural look than the diffractive waveguides shown in Android XR demos, which means a less techy appearance and better odds of mass adoption.

The numbers back it up. More than 2 million pairs of Ray-Ban Meta glasses sold is real traction beyond early adopters. The business engine is locked in, too: Meta has taken a roughly $3.5 billion stake in EssilorLuxottica. Sales of Ray-Ban Meta smart glasses tripled in the first half of 2025 compared with the same period a year earlier, and EssilorLuxottica is building capacity to produce 10 million units annually by the end of 2026. That scale lets Meta subsidize hardware to grow the ecosystem, a strategy many rivals simply cannot mirror.

The privacy elephant threatens market adoption

Privacy is the biggest obstacle. The glasses pack cameras and always-listening microphones into a device worn on your face, a risky mix given Meta's history. That track record already has people on edge, and this product raises the stakes.

Data handling is the sticking point. When users run photos or video through Meta AI, the content goes to Meta's cloud for processing, and Meta's own site says photos processed with AI are stored, used to improve Meta products, and will be used to train Meta's AI. That pipeline expands what the company can learn about its users, and with it, how much influence it can exert.

Social friction is already visible. Stories of people wearing smart glasses in private settings, salons, or venues with no-photography rules show how quickly lines get crossed. The small LED that signals when the camera is recording has drawn criticism from European privacy regulators, a sign that hardware cues alone will not secure social acceptance.

Real-world use can create awkward moments and legal headaches in private or sensitive spaces. These concerns could slow Meta just as the technology reaches mainstream readiness.

What this means for the future of computing: cognitive augmentation becomes reality

Mark Zuckerberg says people without AI-enabled smart glasses will face a "pretty significant cognitive disadvantage" compared with those who wear them. After seeing the display, AI, and neural control working in concert, that prediction feels less like sci-fi and more like a preview.

The specs are already livable. The glasses deliver up to 6 hours of mixed-use battery life, they support prescription lenses from +4.00 to -4.00, and early reviews have been broadly positive, calling out the crisp display, wrist gesture controls, and AI features. Not prototypes, not vaporware, but numbers that fit daily life.

The roadmap is clear. Meta frames smart glasses and VR as stepping stones toward full AR glasses, which it expects to ship in 2027. The long-term goal is to replace traditional apps with AI-driven services, shifting how we interact with information through integrated visual, AI, and neural interfaces.

Will it all land smoothly? Maybe not. Until manufacturers address data concerns and craft a more thoughtful user experience, these glasses could stay niche, more cautionary tale than must-have. The technology is ready to change how we compute; society's comfort with pervasive AI surveillance is the limiting factor.

For now, Meta holds a commanding technical and market lead in what could be the next major computing platform. The question is not whether Meta can build great smart glasses; it already has. The question is whether we can build the social, legal, and ethical guardrails to live with them. The competition has work to do on both fronts.
