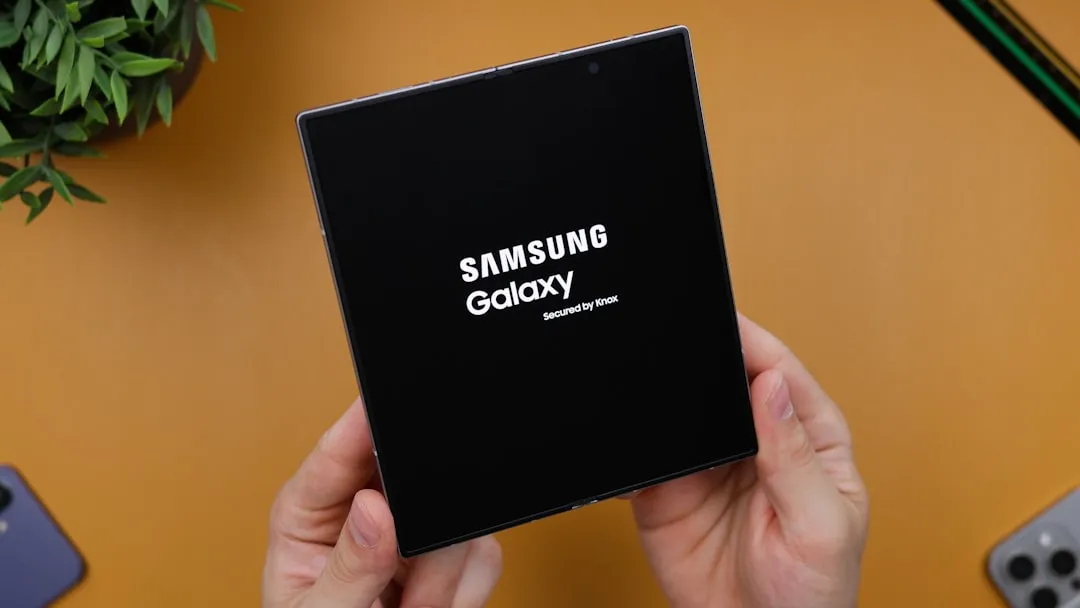

When Samsung's Galaxy XR finally launched after years of speculation, it immediately positioned itself as a formidable challenger to Apple's Vision Pro dominance. The timing could not have been more strategic: Samsung unveiled the headset just as Apple refreshed its own offering with the M5 chip. What follows is a clash of philosophies: raw processing power versus AI-first experiences, two different bets on the future of spatial computing.

You might be wondering why this matchup feels so compelling. Samsung's entry brings clever, user-facing smarts that poke at Apple's premium stance, while Apple's M5 refresh doubles down on computational excellence. The real question is not which headset wins a spec sheet. It is which approach makes spatial computing useful for real people.

The broader implications for XR adoption

These approaches point to different daily realities. Samsung's AI-first strategy imagines assistants handling spatial chores with minimal fuss. Apple's performance-first play unlocks heavier apps and smoother behavior when every millisecond counts.

Global smart glasses shipments surged by approximately 110% in the first half of 2025, a clear sign that interest in wearable AR is rising. Separately, some forecasts put the smart glass (switchable/dynamic-glass) market at about $11.74 billion by 2030, with steady growth expected; that is a different market from wearable smart glasses. The tug-of-war between Samsung's AI-driven approach and Apple's performance philosophy will shape developer priorities and consumer expectations about what spatial computing should actually do.

We are watching two big bets play out in real time. Samsung thinks intelligence will make XR feel natural and accessible. Apple thinks raw power will open entirely new categories. Both could be right. Or the winner could blend the two. Either way, the pace feels familiar, like the early smartphone years when everything moved fast and nothing felt settled.
